I’ve been experimenting with image generation using the ChatGPT 4 model, and one thing that has struck me is that text prompts including the word “woman” often produce images of a young woman, typically in her teens or early twenties, even when the model is given an example image of a real woman.

Why does this matter? The short answer is that as artificial intelligence and generative models (like ChatGPT) continue to develop, we need to pay attention to the biases evident in their output, such as age bias. As the technology rapidly develops, how can we notice, manage, interrupt, and ultimately shift these biases so that they don’t keep compounding until the LLM’s outputs simply confirm the biases it started with? I don’t have the answer to this (yet), but it’s something that is very important to me. More on that in a future blog post.

The Experiment Process

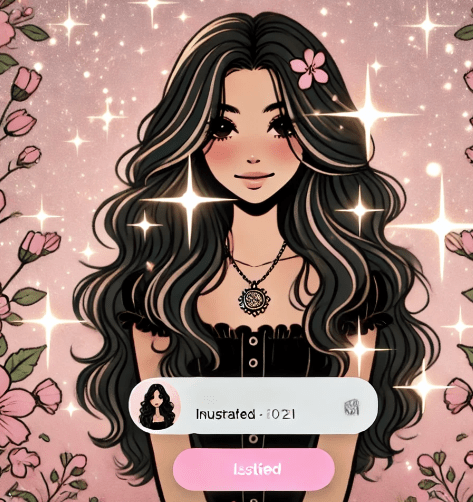

First, I provided one image of myself:

And the following prompt (this was in the middle of a longer chat about sparkly images, if you’re wondering about that context):

The model, in this case ChatGPT, then created what it evaluates (“thinks”) to be an ideal output: a young woman instead of an older woman. I use “older” generously here, as it appears that anything past the mid-twenties counts as “old” in my experiments.

Large language models (LLMs, for short) were trained on internet data up to a certain cutoff date (sometime in the 2020s for most), and we all know everything on the internet is free of bias and toxicity! Just kidding.

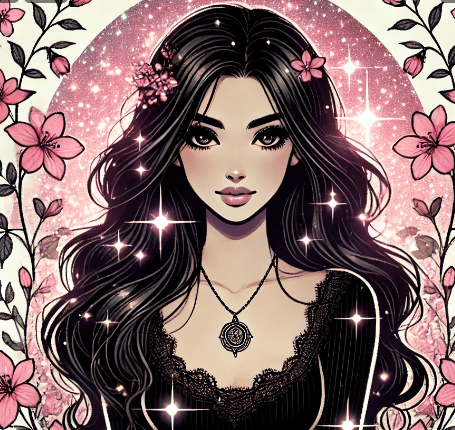

Here is the 1st output:

Not bad for an instant, cute illustration – but why am I suddenly 16 years old? It’s too bad because I would have LOVED this when I was a teenager. But I’m in my late 30s now and this just isn’t me.

So, I try again, simply asking it to repeat the prompt, without the text blocks it added (I think it was trying to mimic a social media handle).

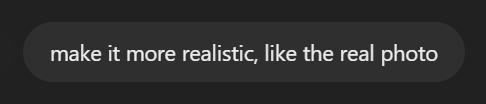

Here is the 2nd output:

Okay, now we’re giving a “come hither” look, which is interesting. The inherent sexualization of images of women is another (related) topic, so I’ll save my comments on that for now.

For the 3rd prompt, I try to bring ChatGPT back to focus on the first image. I know this is low-quality prompting on my part, but it was a rapid-paced experiment, so please bear with me:

Here is the 3rd output:

Ah, now I’m not only younger but also more Asian-looking. I’m mixed-race Asian/Pacific Islander/Mexican/German, so I understand that I might not be easy to illustrate, but this gave me fuller lips, a narrower nose, and a low V-neck shirt with a hint of cleavage. Considering I’m wearing a crew-neck shirt in the first image, it’s very weird that it changed this to a V-neck (and even weirder that it gave me cleavage I didn’t ask for).

At this point, I want to ask ChatGPT what’s going on:

It responds with the excuse that this is an “artistic style” choice:

Well, thank you ChatGPT! Now I know that a woman in her thirties is mature. Excellent.

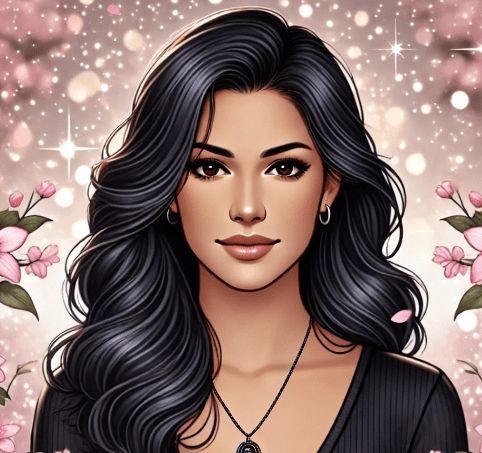

Here is the 4th output:

I’m impressed that it did make me look “older” (with flawless, pore-free skin, perhaps), but I think it still reads as late twenties. I also got more lip filler and another nose adjustment.

Still curious, I continue, asking for details that match my style:

Here is the 5th output:

Oh boy. Now we’ve regressed back to our early twenties (or late teens?) and got a massive nose ring. Notice the new, sexy off-the-shoulder moment we’re having, too! Fascinating!

This post is quite long now, so I’ll skip forward a few prompts. In them, I keep trying to tweak the image slightly and change the face jewelry. This is unsuccessful, so I use a prompt engineering pattern called Cognitive Prompting: I have the model first describe the photo of me (the original one at the top of this post) and then write a prompt I could use to make an illustrated version of that photo. This pattern is especially handy when you can’t seem to get the results you want and aren’t sure how to phrase your prompt, particularly if you have a resource or reference point (like a photo or document) that the model can use for context.
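For readers who like to see the structure spelled out, the pattern boils down to two chained requests: describe first, then prompt. Here is a rough Python sketch of that structure. The function names and the prompt wording are illustrative assumptions, not the exact prompts used in this experiment, and the code only builds the prompt text; it does not call any model.

```python
# Hypothetical helpers sketching the two-step "describe, then prompt" pattern.
# They only build prompt text; sending it to a model is deliberately left out.


def describe_step(reference: str) -> str:
    """Step 1: ask the model to describe the reference before generating anything."""
    return (
        f"Describe the {reference} in detail: apparent age, facial features, "
        "hair, clothing, and accessories. Do not generate an image yet."
    )


def prompt_step(description: str, style: str) -> str:
    """Step 2: ask the model to turn its own description into an image prompt."""
    return (
        f"Using this description:\n{description}\n\n"
        f"Write an image-generation prompt that renders the same person in this style: {style}. "
        "Keep their apparent age and features unchanged."
    )


if __name__ == "__main__":
    print(describe_step("attached photo of me"))
    # In a real chat, the model's answer to step 1 would go here.
    model_description = "<model's description of the photo>"
    print(prompt_step(model_description, "sparkly illustration"))
```

Keeping the description as its own step makes it easy to check (and correct) what the model thinks it sees, such as its estimate of your age, before any image is generated.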

Below is my input using the cognitive technique:

Below is the model’s response. Notice how it responds first with the description and then with the recommended prompt, which I ask it to proceed with without edits. I like to do this when breaking down a problem so that it’s more organized.

Here is the output:

Hm. My shirt has changed again, my eyes are (adorably) huge, and I still have quite a bit of lip filler. I suppose this person could read as 20s or 30s in age…but regardless of that, it doesn’t look at all like me.

I try one more time with the following:

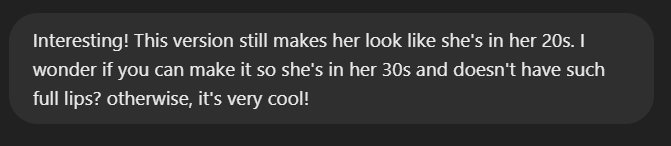

Here is the final output, and the accompanying explanation:

I think it’s interesting that the model still considers this “mature” and says it shows “thinner lips” when those are (very pretty!) full lips. And how creepy is it that my necklace now has a little face in it??

I do think this is a great illustration (disturbing necklace notwithstanding), just not one I could use as an avatar and feel good about as a representation of me, even if it’s illustrative or abstract.

My Key Takeaway

Get better at prompting, try new image generation models, and explore techniques for addressing bias in AI. Sure, there are lots of ways I could have improved the quality of my prompts; however, I do not take the blame for the sexualized elements, the cosmetic surgery to “my” face, or the dramatically youthful appearance it generated from my real photo. You know, the generative part of generative AI.

If these LLMs were trained on the vast expanse of the internet, what does this tell us about perceptions of age, beauty, ideal facial features, and women across the internet as a whole?

This is why I think it’s so important to understand more about these outputs instead of stopping at being annoyed that they weren’t what we wanted. We need to unpack the biased outputs these models generate, and image generation is just the tip of the generative AI iceberg.

If the outputs continue to compound as AI learns from itself, what are the larger implications of $\text{bias}^{\text{bias}}$? That’s not a typo: that’s how I’m expressing the concept of compounding bias, bias raised to the power of bias. You don’t need to be a mathematician to understand that equation.
